Model Complexity and Prediction Error in Macroeconomic Forecasting (or, Statistical Learning Theory to the Rescue!)

Attention conservation notice: 5000+ words, and many equations, about a proposal to improve, not macroeconomic models, but how such models are tested against data. Given the actual level of debate about macroeconomic policy, isn't it Utopian to worry about whether the most advanced models are not being checked against data in the best conceivable way? What follows is at once self-promotional, technical, and meta-methodological; what would be lost if you checked back in a few years, to see if it has borne any fruit?

Some months ago, we — Daniel McDonald, Mark Schervish, and I — applied for one of the initial grants from the Institute for New Economic Thinking, and, to our pleasant surprise, actually got it. INET has now put out a press release about this (and the other awards too, of course), and I've actually started to get some questions about it in e-mail; I am a bit sad that none of these berate me for becoming a tentacle of the Soros conspiracy.

To reinforce this signal, and on the general principle that there's no publicity like self-publicity, I thought I'd post something about the grant. In fact what follows is a lightly-edited version of our initial, stage I proposal, which was intended for maximal comprehensibility, plus some more detailed bits from the stage II document. (Those of you who sense a certain relationship between the grant and Daniel's thesis proposal are, of course, entirely correct.) I am omitting the more technical parts about our actual plans and work in progress, because (i) you don't care; (ii) some of it is actually under review already, at venues which insist on double-blinding; and (iii) I'll post about them when the papers come out. In the meanwhile, please feel free to write with suggestions, comments or questions.

Update, next day: And already I see that I need to be clearer. We are not trying to come up with a new macro forecasting model. We are trying to put the evaluation of macro models on the same rational basis as the evaluation of models for movie recommendations, hand-writing recognition, and search engines.

Proposal: Model Complexity and Prediction Error in Macroeconomic Forecasting

Cosma Shalizi (principal investigator), Mark Schervish (co-PI), Daniel McDonald (junior investigator)

Macroeconomic forecasting is, or ought to be, in a state of confusion. The dominant modeling traditions among academic economists, namely dynamic stochastic general equilibrium (DSGE) and vector autoregression (VAR) models, both spectacularly failed to forecast the financial collapse and recession which began in 2007, or even to make sense of its course after the fact. Economists like Narayana Kocherlakota, James Morley, and Brad DeLong have written about what this failure means for the state of macroeconomic research, and Congress has held hearings in an attempt to reveal the perpetrators. (See especially the testimony by Robert Solow.) Whether existing approaches can be rectified, or whether basically new sorts of models are needed, is a very important question for macroeconomics, and, because of the privileged role of economists in policy making, for the public at large.

Largely unnoticed by economists, over the last three decades statisticians and computer scientists have developed sophisticated methods of model selection and forecast evaluation, under the rubric of statistical learning theory. These methods have revolutionized pattern recognition and artificial intelligence, and the modern industry of data mining would not exist without it. Economists' neglect of this theory is especially unfortunate, since it could be of great help in resolving macroeconomic disputes, and determining the reliability of whatever models emerge for macroeconomic time series. In particular, these methods guarantee with high probability that the forecasts produced by models estimated with finite amounts of data will be accurate. This allows for immediate model comparisons without appealing to asymptotic results or making strong assumptions about the data generating process, in stark contrast to AIC and similar model selection criteria. These results are also provably reliable unlike the pseudo-cross validation approach often used in economic forecasting whereby the model is fit using the initial portion of a data set and evaluated on the remainder. (For illustrations of the last, see, e.g., Athanasopoulos and Vahid, 2008; Faust and Wright, 2009; Christoffel, Coenen, and Warne, 2008; Del Negro, Schorfheide, Smets, and Wouters, 2004; and Smets and Wouters, 2007. This procedure can be heavily biased: the held out data is used to choose the model class under consideration, the distributions of the test set and the training set may be different, and large deviations from the normal course of events [e.g., the recessions in 1980--82] may be ignored.)

In addition to their utility for model selection, these methods give immediate upper bounds for the worst case prediction error. The results are easy to understand and can be reported to policy makers interested in the quality of the forecasts. We propose to extend proven techniques in statistical learning theory so that they cover the kind of models and data of most interest to macroeconomic forecasting, in particular exploiting the fact that major alternatives can all be put in the form of state-space models.

To properly frame our proposal, we review first the recent history and practice of macroeconomic forecasting, followed by the essentials of statistical learning theory (in more detail, because we believe it will be less familiar). We then describe the proposed work and its macroeconomic applications.

Economic Forecasting

Through the 1970s, macroeconomic forecasting tended to rely on "reduced-form" models, predicting the future of aggregated variables based on their observed statistical relationships with other aggregated variables, perhaps with some lags, and with the enforcement of suitable accounting identities. Versions of these models are still in use today, and have only grown more elaborate with the passage of time; those used by the Federal Reserve Board of Governors contain over 300 equations. Contemporary vector autoregression models (VARs) are in much the same spirit.

The practice of academic macroeconomists, however, switched very rapidly in the late 1970s and early 1980s, in large part driven by the famous "critique" of such models by Lucas (published in 1976). He argued that even if these models managed to get the observable associations right, those associations were the aggregated consequences of individual decision making, which reflected, among other things, expectations about variables policy-makers would change in response to conditions. This, Lucas said, precluded using such models to predict what would happen under different policies.

Kydland and Prescott (1982) began the use of dynamic stochastic general equilibrium (DSGE) models to evade this critique. The new aim was to model the macroeconomy as the outcome of individuals making forward-looking decisions based on their preferences, their available technology, and their expectations about the future. Consumers and producers make decisions based on "deep" behavioral parameters like risk tolerance, the labor-leisure trade-off, and the depreciation rate which are supposedly insensitive to things like government spending or monetary policy. The result is a class of models for macroeconomic time series that relies heavily on theories about supposedly invariant behavioral and institutional mechanisms, rather than observed statistical associations.

DSGE models have themselves been heavily critiqued in the literature for ignoring many fundamental economic and social phenomena --- we find the objections to the representative agent assumption particularly compelling --- but we want to focus our efforts on a more fundamental aspect of these arguments. The original DSGE model of Kydland and Prescott had a highly stylized economy in which the only source of uncertainty was the behavior of productivity or technology, whose log followed an AR(1) process with known-to-the-agent coefficients. Much of the subsequent work in the DSGE tradition has been about expanding these models to include more sources of uncertainty and more plausible behavioral and economic mechanisms. In other words, economists have tried to improve their models by making them more complex.

Remarkably, there is little evidence that the increasing complexity of these models actually improves their ability to predict the economy. (Their performance over the last few years would seem to argue to the contrary.) For that matter, much the same sort of questions arise about VAR models, the leading alternatives to DSGEs. Despite the elaborate back-story about optimization, the form in which a DSGE is confronted with the data is a "state-space model," in which a latent (multivariate) Markov process evolves homogeneously in time, and observations are noisy functions of the state variables. VARs also have this form, as do dynamic factor models, and all the other leading macroeconomic time series models we know of. In every case, the response to perceived inadequacies of the models is to make them more complex.

The cases for and against different macroeconomic forecasting models are partly about economic theory, but also involve their ability to fit the data. Abstractly, these arguments have the form "It would be very unlikely that my model could fit the data well if it got the structure of the economy wrong; but my model does fit well; therefore I have good evidence that it is pretty much right." Assessing such arguments depends crucially on knowing how well bad models can fit limited amounts of data, which is where we feel we can make a contribution to this research.

Statistical Learning Theory

Statistical learning theory grows out of advances in non-parametric statistical

estimation and in machine learning. Its goal is to control the risk or

generalization error of predictive models, i.e., their expected inaccuracy on

new data from the same source as that used to fit the model. That is, if the

model f predicts outcomes Y from inputs X and the loss function is  (e.g., mean-squared error or negative log-likelihood), the risk of the model is

(e.g., mean-squared error or negative log-likelihood), the risk of the model is

![\[

R(f) = \mathbb{E}[\ell(Y,f(X))] ~ .

\]](../sloth/700_2.gif)

, is

the average loss over the actual training points. Because the true risk is an

expectation value, we can say that

, is

the average loss over the actual training points. Because the true risk is an

expectation value, we can say that

![\[

\widehat{R}(f) = R(f) + \gamma_n(f) ~ ,

\]](../sloth/700_4.gif)

is a mean-zero noise variable that

reflects how far the training sample departs from being perfectly

representative of the data-generating distribution. By the laws of large

numbers, for each fixed f,

is a mean-zero noise variable that

reflects how far the training sample departs from being perfectly

representative of the data-generating distribution. By the laws of large

numbers, for each fixed f,  as

as

, so, with enough data, we have a good idea of how

well any given model will generalize to new data.

, so, with enough data, we have a good idea of how

well any given model will generalize to new data.

However, economists, like other scientists, never have a single model with

no adjustable parameters fixed for them in advance by theory. (Not even the

most enthusiastic calibrators claim as much.) Rather, there is a class of

plausible models  , one of which in particular is picked

out by minimizing the in-sample loss --- by least squares, or maximum

likelihood, or maximum a posteriori probability, etc. This means

, one of which in particular is picked

out by minimizing the in-sample loss --- by least squares, or maximum

likelihood, or maximum a posteriori probability, etc. This means

![\[

\widehat{f} = \argmin_{f \in \mathcal{F}}{\widehat{R}(f)} = \argmin_{f

\in \mathcal{F}}{\left(R(f) + \gamma_n(f)\right)} ~ .

\]](../sloth/700_9.gif)

, finite-sample noise). The true risk

of

, finite-sample noise). The true risk

of  will generally be bigger than its in-sample risk,

will generally be bigger than its in-sample risk,

, precisely because we picked it to match

the data well. In doing so,

, precisely because we picked it to match

the data well. In doing so,  ends up reproducing some of

the noise in the data and therefore will not generalize well. The difference

between the true and apparent risk depends on the magnitude of the sampling

fluctuations:

ends up reproducing some of

the noise in the data and therefore will not generalize well. The difference

between the true and apparent risk depends on the magnitude of the sampling

fluctuations:

![\[

R(\widehat{f}) - \widehat{R}(\widehat{f}) \leq

\max_{f\in\mathcal{F}}|\gamma_n(f)| = \Gamma_n(\mathcal{F}) ~ .

\]](../sloth/700_14.gif)

by finding tight bounds on it while making

minimal assumptions about the unknown data-generating process; to provide

bounds on over-fitting.

by finding tight bounds on it while making

minimal assumptions about the unknown data-generating process; to provide

bounds on over-fitting.

Using more flexible models (allowing more general functional forms or

distributions, adding parameters, etc.) has two contrasting effects. On the

one hand, it improves the best possible accuracy, lowering the minimum of the

true risk R(f). On the other hand, it also increases the ability to, as it

were, memorize noise, raising  for any fixed sample size

n. This qualitative observation --- a generalization of the bias-variance

trade-off from basic estimation theory --- can be made usefully precise by

quantifying the complexity of model classes. A typical result is a confidence

bound on

for any fixed sample size

n. This qualitative observation --- a generalization of the bias-variance

trade-off from basic estimation theory --- can be made usefully precise by

quantifying the complexity of model classes. A typical result is a confidence

bound on  (and hence on the over-fitting), say that with probability

at least

(and hence on the over-fitting), say that with probability

at least  ,

,

![\[

\Gamma_n(\mathcal{F}) \leq \delta(C(\mathcal{F}), n, \eta) ~ ,

\]](../sloth/700_19.gif)

is.

is.

Several inter-related model complexity measures are now available. The

oldest, called "Vapnik-Chervonenkis

dimension," effectively counts how many different data

sets  can fit well by tuning the parameters in the model.

Another, "Rademacher complexity," directly measures the ability

of

can fit well by tuning the parameters in the model.

Another, "Rademacher complexity," directly measures the ability

of  to correlate with finite amounts of white noise

(Bartlett and

Mendelson,

2002; Mohri

and Rostamizadeh, 2009). This leads to particularly nice bounds of the

form

to correlate with finite amounts of white noise

(Bartlett and

Mendelson,

2002; Mohri

and Rostamizadeh, 2009). This leads to particularly nice bounds of the

form

![\[

\Gamma_n \leq C_n(\mathcal{F}) + \sqrt{k_1\frac{\log{k_2/\eta}}{n}} ~ ,

\]](../sloth/700_23.gif)

and

and  are calculable

constants. Yet another measure of model complexity is the stability of

parameter estimates with respect to perturbations of the data, i.e., how

much

are calculable

constants. Yet another measure of model complexity is the stability of

parameter estimates with respect to perturbations of the data, i.e., how

much  changes when small changes are made to the training

data (Bousquet

and Elisseeff,

2002; Mohri and

Rostamizdaeh, 2010). (Stable parameter estimates do not require models

which are themselves dynamically stable, and the idea could be used on systems

which have sensitive dependence on initial conditions.) The different notions

of complexity lead to bounds of different forms, and lend themselves more or

less easily to calculation for different sorts of models; VC dimension tends to

be the most generally applicable, but also the hardest to calculate, and to

give the most conservative bounds. Importantly, model complexity, in this

sense, is not just the number of adjustable parameters; there are

models with a small number of parameters which are basically inestimable

because they are so unstable, and conversely, one of the great success stories

of statistical learning theory has been devising models

("support vector machines") with

huge numbers of parameters but low and known capacity to over-fit.

changes when small changes are made to the training

data (Bousquet

and Elisseeff,

2002; Mohri and

Rostamizdaeh, 2010). (Stable parameter estimates do not require models

which are themselves dynamically stable, and the idea could be used on systems

which have sensitive dependence on initial conditions.) The different notions

of complexity lead to bounds of different forms, and lend themselves more or

less easily to calculation for different sorts of models; VC dimension tends to

be the most generally applicable, but also the hardest to calculate, and to

give the most conservative bounds. Importantly, model complexity, in this

sense, is not just the number of adjustable parameters; there are

models with a small number of parameters which are basically inestimable

because they are so unstable, and conversely, one of the great success stories

of statistical learning theory has been devising models

("support vector machines") with

huge numbers of parameters but low and known capacity to over-fit.

However we measure model complexity, once we have done so and have established

risk bounds, we can use those bounds for two purposes. One is to give a sound

assessment of how well our model will work in the future; this has clear

importance if the model's forecasts will be used to guide individual actions or

public policy. The other aim, perhaps even more important here, is to select

among competing models in a provably reliable way. Comparing in-sample

performance tends to pick complex models which over-fit. Adding heuristic

penalties based on the number of parameters, like the Akaike information

criterion (AIC), also does not solve the problem, basically because AIC

corrects for the average size of

over-fitting but ignores the variance (and higher moments). But if we

could instead use  as our penalty, we would

select the model which actually will generalize better. If we only have a

confidence limit on

as our penalty, we would

select the model which actually will generalize better. If we only have a

confidence limit on

and use that as our penalty, we select the better model with high

confidence and can in many cases calculate the extra risk that comes from model

selection (Massart, 2007).

and use that as our penalty, we select the better model with high

confidence and can in many cases calculate the extra risk that comes from model

selection (Massart, 2007).

Our Proposal

Statistical learning theory has proven itself in many practical applications, but most of its techniques have been developed in ways which keep us from applying it immediately to macroeconomic forecasting; we propose to rectify this deficiency. We anticipate that each of the three stages will require approximately a year. (More technical details follow below.)

First, we need to know the complexity of the model classes to which we wish to apply the theory. We have already obtained complexity bounds for AR(p) models, and are working to extend these results to VAR(p) models. Beyond this, we need to be able to calculate the complexity of general state-space models, where we plan to use the fact that distinct histories of the time series lead to different predictions only to the extent that they lead to different values of the latent state. We will then refine those results to find the complexity of various common DSGE specifications.

Second, most results in statistical learning theory presume that successive data points are independent of one another. This is mathematically convenient, but clearly unsuitable for time series. Recent work has adapted key results to situations where widely-separated data points are asymptotically independent ("weakly dependent" or "mixing" time series) [Meir, 2000; Mohri and Rostamizadeh, 2009, 2010; Dedecker et al., 2007]. Basically, knowing the rate at which dependence decays lets one calculate how many effectively-independent observations the time series has and apply bounds with this reduced, effective sample size. We aim to devise model-free estimates of these mixing rates, using ideas from copulas and from information theory. Combining these mixing-rate estimates with our complexity calculations will immediately give risk bounds for DSGEs, but not just for them.

Third, a conceptually simple and computationally attractive alternative to using learning theory to bound over-fitting is to use an appropriate bootstrap for dependent data to estimate generalization error. However, this technique currently has no theoretical basis, merely intuitive plausibility. We will investigate the conditions under which bootstrapping can yield non-asymptotic guarantees about generalization error.

Taken together, these results can provide probabilistic guarantees on a proposed forecasting model's performance. Such guarantees can give policy makers reliable empirical measures which intuitively explain the accuracy of a forecast. They can also be used to pick among competing forecasting methods.

Why This Grant?

As we said, there has been very little use of modern learning theory in economics (Al-Najjar, 2009 is an interesting, but entirely theoretical, exception), and none that we can find in macroeconomic forecasting. This is an undertaking which requires both knowledge of economics and of economic data, and skill in learning theory, stochastic processes, and prediction theory for state-space models. We aim to produce results of practical relevance to forecasting, and present them in such a way that econometricians, at least, can grasp their relevance.

If all we wanted to do was produce yet another DSGE, or even to improve the approximation methods used in DSGE estimation, there would be plenty of funding sources we could turn to, rather than INET. We are not interested in making those sorts of incremental advances (if indeed proposing a new DSGE is an "advance"). We are not even particularly interested in DSGEs. Rather, we want to re-orient how economic forecasters think about basic issues like evaluating their accuracy and comparing their models --- topics which should be central to empirical macroeconomics, even if DSGEs vanished entirely tomorrow. Thus INET seems like a much more natural sponsor than institutions with more of a commitment to existing practices and attitudes in economics.

[Detailed justification of our draft budget omitted]

Detailed exposition

In what follows, we provide a more detailed exposition of the technical content of our proposed work, including preliminary results. This is, unavoidably, rather more mathematical than our description above.

The initial work described here builds mainly on the work of Mohri and Rostamizadeh, 2009, which offers a handy blueprint for establishing data-dependent risk bounds which will be useful for macroeconomic forecasters. (Whether this bound is really optimal is another question we are investigating.) The bound that they propose has the general form

![\[

R(\widehat{f}) - \widehat{R}(\widehat{f}) \leq C_n(\mathcal{F}) +

\sqrt{k_1\frac{\log k_2(\eta)}{m(n)}} ~ .

\]](../sloth/700_29.gif)

, in

this case what is called the "Rademacher complexity", and a term which

depends on the desired confidence level (through

, in

this case what is called the "Rademacher complexity", and a term which

depends on the desired confidence level (through  ), and the amount of

data n used to choose

), and the amount of

data n used to choose  . For many problems, the calculation of

the complexity term is well known in the literature. However, this is not the

case for state-space models. The final term depends on the mixing behavior of

the time series (assumed to be known). In the next sections we highlight some

progress we have made toward calculating the model complexity for state-space

models and estimating the mixing rate from data. We then apply the bound to a

simple example attempting to predict interest rate movements using an AR

model. Finally, we discuss the logic behind using the bootstrap to estimate a

bound for the risk. All of these results are new and require further research

to make them truly useful to economic forecasters.

. For many problems, the calculation of

the complexity term is well known in the literature. However, this is not the

case for state-space models. The final term depends on the mixing behavior of

the time series (assumed to be known). In the next sections we highlight some

progress we have made toward calculating the model complexity for state-space

models and estimating the mixing rate from data. We then apply the bound to a

simple example attempting to predict interest rate movements using an AR

model. Finally, we discuss the logic behind using the bootstrap to estimate a

bound for the risk. All of these results are new and require further research

to make them truly useful to economic forecasters.

Model complexity

As mentioned earlier, statistical learning theory provides several ways of

measuring the complexity of a class of predictive models. The results we are

using here rely on what is known as the Rademacher complexity, which can be thought of as

measuring how well the model can (seem to) fit white noise. More specifically,

when we have a class  of prediction functions f,

the Rademacher complexity of the class is

of prediction functions f,

the Rademacher complexity of the class is

![\[

C_n(\mathcal{F}) \equiv 2 \mathbf{E}_{X}\left[\mathbf{E}_{Z}\left[ \sup_{f\in

\mathcal{F}}{\left|\frac{1}{n} \sum_{i=1}^{n}{Z_i f(X_i) } \right|}

\right]\right] ~ ,

\]](../sloth/700_34.gif)

are a

sequence of random variables, independent of each other and everything else,

and equal to +1 or -1 with equal probability. (These are known in the field as

"Rademacher random variables". Very similar results exist for other sorts of

noise, e.g., Gaussian white noise.) The term inside the

supremum,

are a

sequence of random variables, independent of each other and everything else,

and equal to +1 or -1 with equal probability. (These are known in the field as

"Rademacher random variables". Very similar results exist for other sorts of

noise, e.g., Gaussian white noise.) The term inside the

supremum,  , is

the sample covariance between the noise Z and the predictions of a

particular model f. The Rademacher complexity takes the largest

value of this sample correlation over all models in the class, then averages

over realizations of the noise. Omitting the final average over the

data X gives the "empirical Rademacher complexity", which can be

shown to converge very quickly to its expected value as n grows. The

final factor of 2 is conventional, to simplify some formulas we will not repeat

here.

, is

the sample covariance between the noise Z and the predictions of a

particular model f. The Rademacher complexity takes the largest

value of this sample correlation over all models in the class, then averages

over realizations of the noise. Omitting the final average over the

data X gives the "empirical Rademacher complexity", which can be

shown to converge very quickly to its expected value as n grows. The

final factor of 2 is conventional, to simplify some formulas we will not repeat

here.

The idea, stripped of the technicalities required for actual implementation,

is to see how well our models could seem to fit outcomes which were

actually just noise. This provides a kind of baseline against which to assess

the risk of over-fitting, or failing to generalize. As the sample

size n grows, the sample correlation

coefficients  will approach 0 for each particular f, by the law of large numbers;

the over-all Rademacher complexity should also shrink, though more slowly,

unless the model class is so flexible that it can fit absolutely anything, in

which case one can conclude nothing about how well it will predict in the

future from the fact that it performed well in the past.

will approach 0 for each particular f, by the law of large numbers;

the over-all Rademacher complexity should also shrink, though more slowly,

unless the model class is so flexible that it can fit absolutely anything, in

which case one can conclude nothing about how well it will predict in the

future from the fact that it performed well in the past.

One of our goals is to calculate the Rademacher complexity of stationary state-space models. [Details omitted.]

Mixing rates

Because time-series data are not independent, the number of data

points n in a sample S is no longer a good

characterization of the amount of information available in that sample. Knowing

the past allows forecasters to predict future data points to some degree, so

actually observing those future data points gives less information

about the underlying data generating process than in the case of iid data. For

this reason, the sample size term must be adjusted by the amount of dependence

in the data to determine the effective sample size  which can be much less than the true sample size n. These sorts of

arguments can be used to show that a typical data series

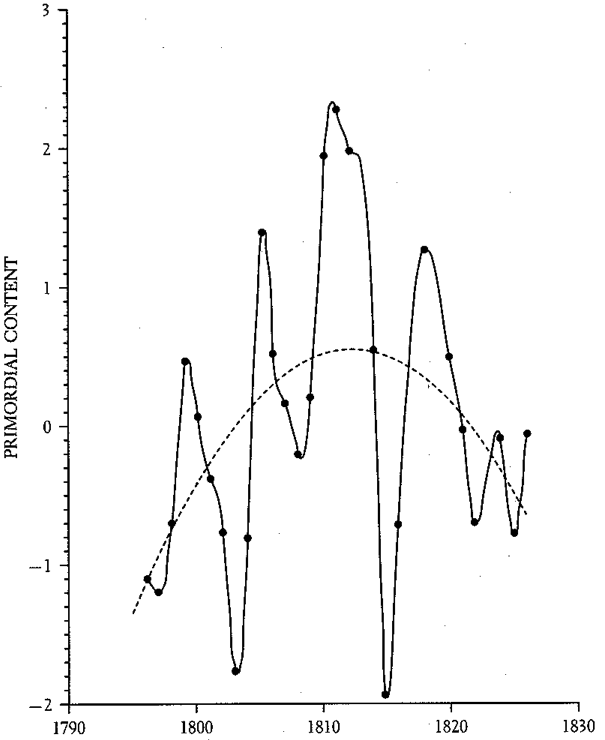

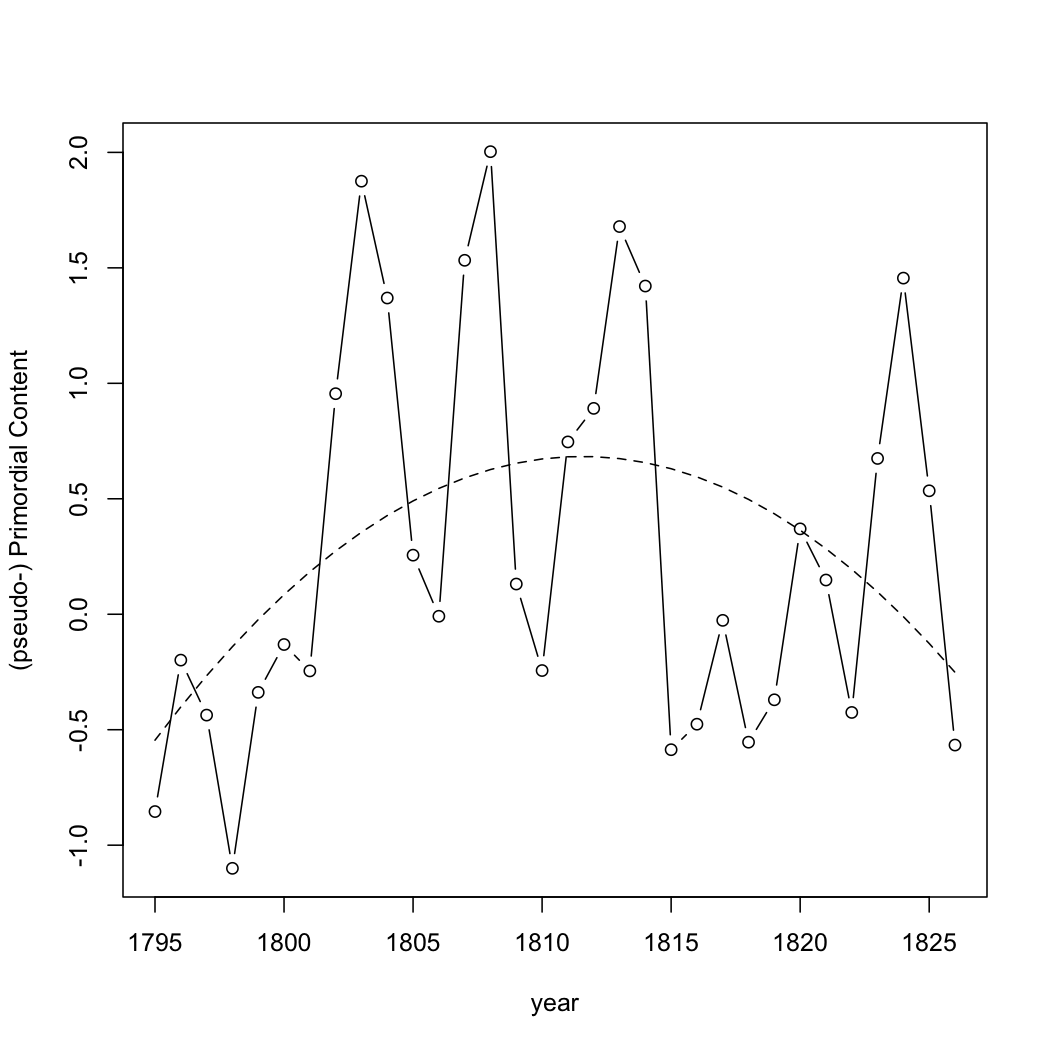

used for macroeconomic forecasting, detrended growth rates of US GDP from 1947

until 2010, has around n=252 actual data points, but an effective

sample size of

which can be much less than the true sample size n. These sorts of

arguments can be used to show that a typical data series

used for macroeconomic forecasting, detrended growth rates of US GDP from 1947

until 2010, has around n=252 actual data points, but an effective

sample size of  . To determine the effective sample

size to use, we must be able to estimate the dependence of a given time

series. The necessary notion of dependence is called the mixing rate.

. To determine the effective sample

size to use, we must be able to estimate the dependence of a given time

series. The necessary notion of dependence is called the mixing rate.

Estimating the mixing rates of time-series data is a problem that has not

been well studied in the

literature. According

to Ron Meir, "as far as we are aware, there is no efficient practical

approach known at this stage for estimation of mixing parameters". In this

case, we need to be able to estimate a quantity known as

the  -mixing rate.

-mixing rate.

Definition. Let(Herebe a stationary sequence of random variables or stochastic process with joint probability law

. For

, let

, the

-field generated by the observations between those times. Let

be the restriction of

to

with density

,

be the restriction of

to

with density

, and

the restriction of

to

with density

. Then the

-mixing coefficient at lag

is

is the total variation distance, i.e., the

largest difference between the probabilities that

is the total variation distance, i.e., the

largest difference between the probabilities that  and

and  assign to a

single event. Also, to simplify notation, we stated the definition assuming

stationarity, but this is not strictly necessary.)

assign to a

single event. Also, to simplify notation, we stated the definition assuming

stationarity, but this is not strictly necessary.)

The stochastic process X is called "  -mixing"

if

-mixing"

if  as

as  ,

meaning that the joint probability of events which are widely separated in time

increasingly approaches the product of the individual probabilities ---

that X is asymptotically independent.

,

meaning that the joint probability of events which are widely separated in time

increasingly approaches the product of the individual probabilities ---

that X is asymptotically independent.

The form of the definition of the  -mixing coefficient

suggests a straightforward though perhaps naive procedure: use nonparametric

density estimation for the two marginal distributions as well as the joint

distribution, and then calculate the total variation distance by numerical

integration. This would be simple in principle, and could give good results;

however, one would need to show not just that the procedure was consistent, but

also learn enough about it that the generalization error bound could be

properly adjusted to account for the additional uncertainty introduced by using

an estimate rather than the true quantity. Initial numerical experiments on

the naive are not promising, but we are pursuing a number of more refined

ideas.

-mixing coefficient

suggests a straightforward though perhaps naive procedure: use nonparametric

density estimation for the two marginal distributions as well as the joint

distribution, and then calculate the total variation distance by numerical

integration. This would be simple in principle, and could give good results;

however, one would need to show not just that the procedure was consistent, but

also learn enough about it that the generalization error bound could be

properly adjusted to account for the additional uncertainty introduced by using

an estimate rather than the true quantity. Initial numerical experiments on

the naive are not promising, but we are pursuing a number of more refined

ideas.

Bootstrap

An alternative to calculating bounds on forecasting error in the style of statistical learning theory is to use a carefully constructed bootstrap to learn about the generalization error. A fully nonparametric bootstrap for time series data uses the circular bootstrap reviewed in Lahiri, 2003. The idea is to wrap the data of length n around a circle and randomly sample blocks of length q. There are n possible blocks, each starting with one of the data points 1 to n. Politis and White (2004) give a method for choosing q. The following algorithm proposes a bootstrap for bounding the generalization error of a forecasting method.- Take the time series, call it X. Fit a model

, and calculate the in-sample risk,

, and calculate the in-sample risk,  .

.

- Repeat for B times:

-

Bootstrap a new series Y from X, which is several times longer

than X. Call the initial segment, which is as long as X,

.

.

- Fit a model to this,

, and calculate its in-sample risk,

, and calculate its in-sample risk,

.

.

- Calculate the risk of

on the rest of Y. Because

the process is stationary and Y is much longer than X, this should

be a reasonable estimate of the generalization error of

on the rest of Y. Because

the process is stationary and Y is much longer than X, this should

be a reasonable estimate of the generalization error of  .

.

- Store the difference between the in-sample and generalization risks.

-

Bootstrap a new series Y from X, which is several times longer

than X. Call the initial segment, which is as long as X,

- Find the

percentile of the distribution of over-fits. Add

this to

percentile of the distribution of over-fits. Add

this to  .

.

While intuitively plausible, there is no theory, yet, which says that the results of this bootstrap will actually control the generalization error. Deriving theoretical results for this type of bootstrap is the third component of our grant application.

Manual trackback: Economics Job Market Rumors [!]

Posted by crshalizi at December 02, 2010 12:55 | permanent link

![\[ \beta(m) \equiv {\left\|\mathbb{P}_t \otimes \mathbb{P}_{t+m} -

\mathbb{P}_{t \otimes t+m}\right\|}_{TV} = \frac{1}{2}\int{\left|f_t f_{t+m} -

f_{t\otimes t+m}\right|}

\]](../sloth/700_85.gif)

![\[

\mathbf{E}\left[C|Y=y\right] = 0

\]](http://bactra.org/sloth/679_1.gif)

![\[

\mathbf{E}\left[C\right] = \mathbf{E}\left[\mathbf{E}\left[C|Y=y\right]\right]

\]](http://bactra.org/sloth/679_2.gif)

![\[

\frac{1}{n}\sum_{t=1}^{n}{f(X_t)} \rightarrow f_1

\]](http://bactra.org/sloth/679_3.gif)

![\[

\lim_{n\rightarrow\infty}{\frac{1}{n}\sum_{t=1}^{n}{P_t(A)}} = P(A)

\]](http://bactra.org/sloth/679_4.gif)

![\[

\lim_{n\rightarrow\infty}{\frac{1}{n}\sum_{t=0}^{n-1}{P_1(A \cap T^{-t} B)}} = P_1(A) P(B)

\]](http://bactra.org/sloth/679_5.gif)

![\[

A_n = \frac{1}{n}\sum_{t=1}^{n}{X_t}

\]](../sloth/668_1.gif)

![\[

\mathbf{E}[A_n] = \frac{1}{n}\sum_{t=1}^{n}{\mathbf{E}[X_t]} = \frac{n}{n}m = m

\]](../sloth/668_2.gif)

![\begin{eqnarray*}

\mathrm{Var}[A_n] & = & \mathbf{E}[A_n^2] - m^2\\

& = & \frac{1}{n^2}\mathbf{E}\left[{\left(\sum_{t=1}^{n}{X_t}\right)}^2\right] - m^2\\

& = & \frac{1}{n^2}\mathbf{E}\left[\sum_{t=1}^{n}{\sum_{s=1}^{n}{X_t X_s}}\right] - m^2\\

& = & \frac{1}{n^2}\sum_{t=1}^{n}{\sum_{s=1}^{n}{\mathbf{E}\left[X_t X_s\right]}} - m^2\\

& = & \frac{1}{n^2}\sum_{t=1}^{n}{\sum_{s=1}^{n}{ c_{s-t} + m^2}} - m^2\\

& = & \frac{1}{n^2}\sum_{t=1}^{n}{\sum_{s=1}^{n}{ c_{s-t}}}\\

& = & \frac{1}{n^2}\sum_{t=1}^{n}{\sum_{h=1-t}^{n-t}{ c_h}}

\end{eqnarray*}](../sloth/668_3.gif)

![\[

\mathrm{Var}[A_n] = \frac{v}{n} + \frac{2}{n^2}\sum_{t=1}^{n-1}{\sum_{h=1}^{n-t}{c_{h}}}

\]](../sloth/668_4.gif)

![\[

\sum_{h=1}^{\infty}{|c_h|} < \infty

\]](../sloth/668_6.gif)

,

where T is called the "(auto)covariance time", "integrated

(auto)covariance time" or "(auto)correlation time". We are assuming a finite

correlation time. (Exercise: Suppose that

,

where T is called the "(auto)covariance time", "integrated

(auto)covariance time" or "(auto)correlation time". We are assuming a finite

correlation time. (Exercise: Suppose that  , as

would be the case for a first-order linear autoregressive model, and

find T. This confirms, by the way, that the assumption of finite

correlation time can be satisfied by processes with non-zero

correlations.)

, as

would be the case for a first-order linear autoregressive model, and

find T. This confirms, by the way, that the assumption of finite

correlation time can be satisfied by processes with non-zero

correlations.)

![\begin{eqnarray*}

\mathrm{Var}[A_n] & = & \frac{v}{n} + \frac{2}{n^2}\sum_{t=1}^{n-1}{\sum_{h=1}^{n-t}{c_{h}}}\\

& \leq & \frac{v}{n} + \frac{2}{n^2}\sum_{t=1}^{n-1}{v T}\\

& = & \frac{v}{n} + \frac{2(n-1) vT}{n^2}\\

& \leq & \frac{v}{n} + \frac{2 vT}{n}\\

& = & \frac{v}{n}(1+ 2T)

\end{eqnarray*}](../sloth/668_9.gif)

![\[

\mathrm{Pr}\left(|A_n - m| > \epsilon\right) \leq \frac{\mathrm{Var}[A_n]}{\epsilon^2} \leq \frac{v}{\epsilon^2} \frac{2T+1}{n}

\]](../sloth/668_10.gif)

![\[

\lim_{n\rightarrow 0}{\frac{1}{n}\sum_{h=1}^{n}{c_h}} = 0

\]](../sloth/668_11.gif)